“Humans didn’t learn to swim by reading” — AI in mobility goes physical, from language to action

Three major autonomous driving developers unveil models trained on video

Published on Jan. 09, 2026

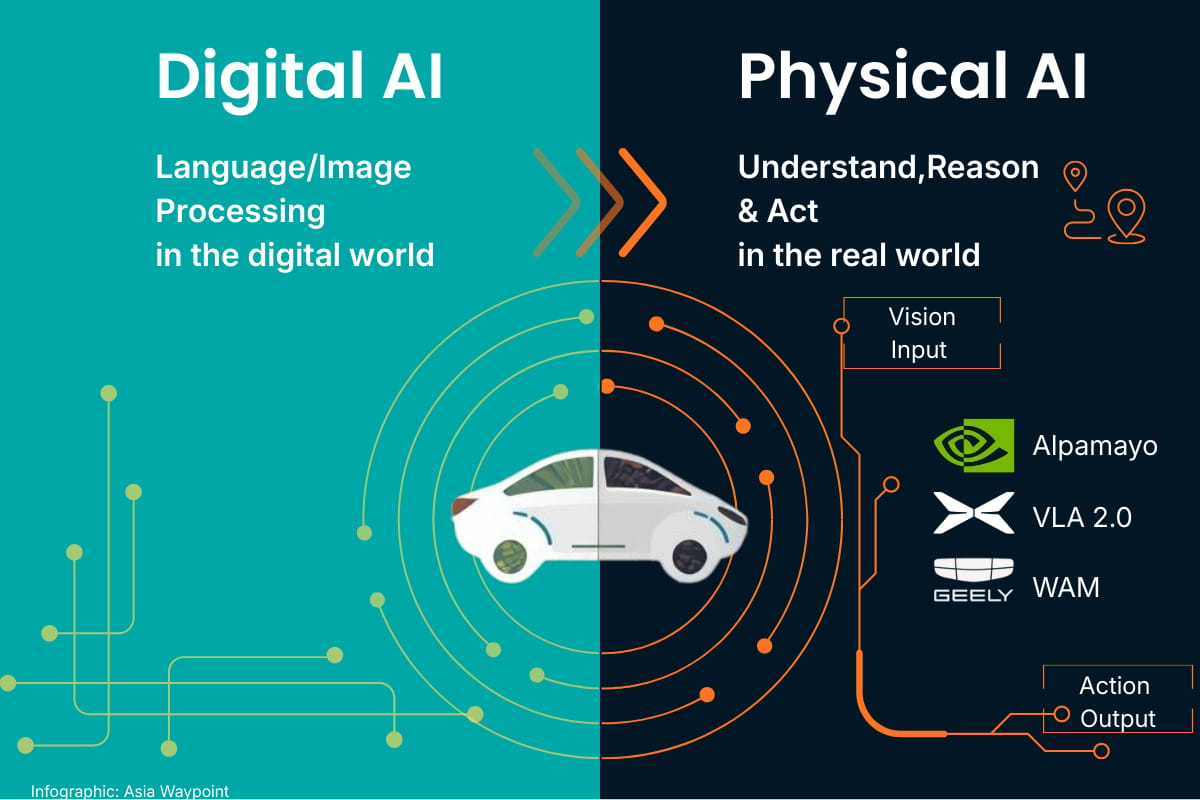

A shift from language to action is reshaping autonomous driving. Several major players in both China and the United States have launched new technology stacks for autonomous driving, betting on world models that learn visually through experience, not just data.

The Chinese e-vehicle startup Xpeng launched its new large model VLA 2.0, saying it was trained on nearly 100 million video clips containing the equivalent of the “extreme driving scenarios a human driver would face in 65,000 years”.

The company's founder and CEO He Xiaopeng called it a breakthrough and predicted "a new dawn for autonomous driving in China and the US" at the 2026 Xpeng Global New Product Launch held in Guangzhou on January 8, 2026, reported the Chinese car portal Gasgoo. (In Chinese)

Xpeng's VLA 2.0 is reported to have 72 billion parameters, and the company claims it is a system that "truly understands the physical world in a human-like way". By removing the "language translation" step inherent to traditional VLA models, the new VLA 2.0 model "enables direct visual-to-action generation".

Language is no longer used as a tool for training, but only as an additional source of instructions while driving. He Xiaopeng calls this a "fundamental shift—from the age of digital intelligence to a new paradigm where AI directly shapes the physical world".

While switching from traditional, language-based model training to training on video, Xpeng discovered that its new world model was showing signs of "emergence", which in machine learning describes the moment when the AI starts to "learn by itself", imitating actions through experience rather than by following clear instructions.

One example was that the test cars started to "creep" when approaching intersections, without anyone explicitly telling them to, which is called a "black hole effect". Another example was the "ant colony effect", where AI agents communicating locally showed adaptive intelligence without centralised command.

Xpeng said it has won Volkswagen as a launch partner for the new technology. There is also a concrete roadmap for its roll-out. Road tests with robotaxis equipped with the VLA 2.0 model will start soon and three robotaxis with the AI model are scheduled to be launched this year, the company announced.

The ChatGPT moment for physical AI is here.

Nvidia founder Jensen Huang revealed that his company is following the same technological route when he announced Nvidia's entry into the autonomous driving industry at CES in Las Vegas on January 5, 2026.

"The ChatGPT moment for physical AI is here - when machines begin to understand, reason and act in the real world," Huang said, according to an Nvidia press release.

Nvidia's model, Alpamayo, was trained end-to-end, "literally from camera-in to actuation-out", signalling a strategy similar to Xpeng's for the future of autonomous driving systems. Huang also announced that the new driver assistance software will be featured in the upcoming Mercedes-Benz CLA.

Geely, China's second-largest vehicle maker, also chose CES as the stage to reveal its latest advances in AI plus mobility. Geely launched its full-domain "World Action Model" (WAM), saying that it enables cars to "gain an evolving worldview and judgement".

WAM combines a multimodal large language model (MLLM) and a world model for predictive simulations, capable of "millisecond-level physical simulations of action sequences", Geely said. The company described its G-ASD (Geely Afari Smart Driving) driver assistance system as a solution capable of "end-to-end self-correction", resulting in a car that "understands, judges and evolves on its own".

What the announcements by Xpeng, Nvidia and Geely have in common is that they point to a faster route to Level 4 autonomous driving than seemed possible a short while ago. With the language layer removed during training, the AI models are much faster at "learning" driving behaviour, all of these developers claim.

This amounts to a big shift away from large language models as the holy grail of AI. “Humans don’t learn to swim from reading. We learn by sensing,” He Xiaopeng of Xpeng said.

This seems to confirm what one of the pioneers of artificial intelligence has said for quite some time. Fei-Fei Li, a professor of artificial intelligence at Stanford University, has long advocated the visual development route over language-centred LLMs.

“Complex language is unique to humans and evolved in less than 500,000 years”, said Fei-Fei Li, “but biological understanding of, interaction with, and communication in the 3D world evolved over 540 million years”. AI will remain incomplete until the fundamental problem of spatial intelligence is solved, she argues.

At the beginning of this year, major movers and shakers in the automotive industry in China and the United States have started to concur.